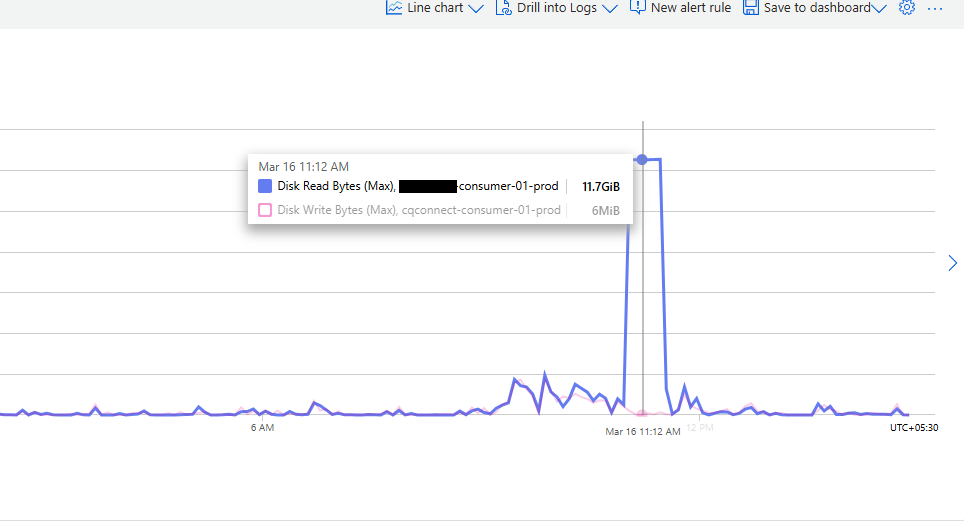

Before I ever thought of creating a Linux disk I/O monitoring tool, a developer handed me this screenshot, a mystery, and a cry for help:

“We’re getting high IOPS alerts on our production VM, but we have no idea what’s causing it.”

It was a reminder of how important proactive disk monitoring is for preventing issues like this.

The disk read activity spiked to 11.7 GiB/s—an unusually high number for that setup. The alert was legit, but the problem? We had zero visibility into which process or user was responsible. No trace in logs. No obvious culprit in running processes. And by the time anyone looked, the spike was gone.

That was the day Spike Sleuth was born.

The Need: A Linux Disk I/O Monitoring Tool That Catches the Culprit in Real-Time

If you work on systems, you know disk I/O spikes can wreak havoc: slowdowns, missed SLAs, poor user experience. But knowing a spike happened is only half the story. What matters is who or what caused it. I needed a Linux disk I/O monitoring tool that could:

- Detect sudden spikes in disk usage

- Tell me which process, user, and file were behind it

- Run in the background with minimal footprint

- Not become a part of the problem

First Thoughts: iostat, lsof, and Minimal Interference

The first question was: How can I catch the spike as it happens, not after it’s over?

💡 Plan:

- Use iostat to monitor read/write throughput.

- When a spike is detected, grab a snapshot using lsof and iotop to see which processes and files are active.

- Log the findings.

iostat gave me this:

```bash
iostat -d -k 1 2 | grep 'sda'
```
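The grep shows the raw device line, but a script needs a single number. Here is a sketch of one way to get it, assuming sysstat's extended output (-x), where the header row starts with "Device" and the read column is labelled rMB/s. Column order differs between sysstat versions, so the column is looked up by name rather than by position. The function skips the first report (averages since boot) and returns the highest per-device rate. The name max_read_mb is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: highest per-device read rate (MB/s) from `iostat -dmx 1 2` output on stdin.
max_read_mb() {
    awk '
        # Each report begins with a header row; remember which field is rMB/s.
        /^Device/ { report++; for (i = 1; i <= NF; i++) if ($i == "rMB/s") col = i; next }
        # Ignore report 1 (averages since boot) and the blank separator lines.
        report >= 2 && NF > 0 && col { if ($col + 0 > max) max = $col + 0 }
        END { printf "%.2f\n", max }
    '
}

# On a live system; LC_ALL=C keeps "." as the decimal separator:
#   LC_ALL=C iostat -dmx 1 2 | max_read_mb
```

Taking the maximum rather than the sum avoids double-counting when device-mapper volumes (dm-*) are listed alongside the disks underneath them.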

And lsof was the hammer to catch file access:

```bash
lsof /dev/sda
```

But here’s where it gets interesting…

Logging Can Be a Spike Too 😅

At first, I considered logging everything all the time—but then it hit me. Constant logging means constant disk writes, which could:

- Mask the actual spike

- Be mistaken for a spike by monitoring tools

- Add overhead to disks that are already under stress (a familiar challenge for anyone managing disk space on servers)

So, I decided to go event-based: only log when a certain threshold is crossed. This became the foundation of the first Spike Sleuth script. Default threshold? 5GB/s (configurable).

```bash
READ_THRESHOLD=5000 # in MB/s
```
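Event-based logging boils down to a gate in front of the log writer. A minimal sketch, assuming rates arrive as decimal MB/s strings like the ones iostat prints; the function names over_threshold and log_if_spike are hypothetical. Bash arithmetic is integer-only, so the comparison is handed to awk, and the brace group appends the whole entry through a single redirection.

```bash
#!/usr/bin/env bash
# Sketch: only touch the log when the read rate crosses the threshold.
READ_THRESHOLD=${READ_THRESHOLD:-5000}                  # MB/s
LOG_FILE=${LOG_FILE:-/var/log/disk_io_spike.log}

# Succeeds when rate >= threshold. awk does the comparison because bash's
# (( )) cannot handle decimals such as "5123.40".
over_threshold() {
    awk -v r="$1" -v t="$2" 'BEGIN { exit !(r + 0 >= t + 0) }'
}

# Hypothetical helper: the quiet path writes nothing; the spike path appends one entry.
log_if_spike() {
    local rate=$1
    over_threshold "$rate" "$READ_THRESHOLD" || return 0
    # A single redirection for the whole entry: the log is opened once per event.
    {
        printf '[%s] Read rate %s MB/s crossed %s MB/s\n' "$(date '+%F %T')" "$rate" "$READ_THRESHOLD"
        printf '%s\n' '----------------------------------------'
    } >> "$LOG_FILE"
}
```

Below the threshold the function returns without opening the file, so a quiet disk generates no log writes at all.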

The “One Spike, One Log” Problem

Once I had it working, I noticed another flaw.

Let’s say a spike happens at 11:12 AM. The tool logs it once. Cool.

But what if another process jumps into the I/O frenzy 2 seconds later?

We miss that.

So I enhanced the script to stay in logging mode for a few seconds after a spike, capturing all I/O offenders—not just the first.

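```bash
# Inside spike-sleuth.sh
if (( current_read > READ_THRESHOLD )); then
    if [ "$in_spike" != true ]; then
        # New spike: log it and stay in logging mode for HOLD_LOG_DURATION seconds
        in_spike=true
        spike_end_time=$(( $(date +%s) + HOLD_LOG_DURATION ))
        log_spike
    fi
else
    # Leave logging mode only after the hold window has expired
    if [ "$in_spike" = true ] && [ "$(date +%s)" -gt "$spike_end_time" ]; then
        in_spike=false
    fi
fi
```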
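The hold window is easier to reason about when it can be replayed against fixed input. The sketch below is a variation on the snippet above, not the script's exact logic: it re-arms the window on every over-threshold reading, so logging continues until HOLD_LOG_DURATION seconds after the last high reading. It reads hypothetical "<epoch-seconds> <MB/s>" lines from stdin instead of calling date and iostat, and spike_window is an illustrative name.

```bash
#!/usr/bin/env bash
# Sketch of a re-arming hold window, replayed from "<epoch-seconds> <MB/s>" lines.
READ_THRESHOLD=${READ_THRESHOLD:-5000}      # MB/s
HOLD_LOG_DURATION=${HOLD_LOG_DURATION:-10}  # seconds to keep logging after the last high reading

spike_window() {
    local now rate in_spike=false spike_end_time=0
    while read -r now rate; do
        # Whole MB/s are enough here, as in the script (${READ_MB%.*}).
        if (( ${rate%.*} >= READ_THRESHOLD )); then
            # Every high reading pushes the end of the window out again,
            # so an offender that shows up mid-spike is still captured.
            in_spike=true
            spike_end_time=$(( now + HOLD_LOG_DURATION ))
        elif [[ $in_spike == true ]] && (( now > spike_end_time )); then
            in_spike=false
        fi
        # "log" marks ticks where the real tool would snapshot iotop and lsof.
        if [[ $in_spike == true ]]; then
            echo "$now log"
        else
            echo "$now idle"
        fi
    done
}
```

For example, a reading of 6000 MB/s at t=101 followed by 300 MB/s readings keeps the window open through t=111 and closes it at t=112.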

Making It Stick: From Script to Systemd Service

Developers loved the idea—but then came the “how do I keep it running?” questions. I didn’t want anyone to:

- Keep terminals open forever

- Remember to nohup it

- Run crons or screen sessions

That’s when I made the leap to a proper systemd service.

```bash
sudo ./spike-sleuth.sh install
```

Boom. Now it:

- Starts on boot

- Restarts if it crashes

- Logs automatically

- Respects thresholds

Check status anytime:

```bash
sudo ./spike-sleuth.sh status
```
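To change a threshold on a host where the service is already installed, a systemd drop-in avoids re-running the installer. A sketch, assuming the unit lives at /etc/systemd/system/spike-sleuth.service as written by the install step. write_override is a hypothetical helper that takes the unit directory as an argument so it can be tried against a scratch directory; each call replaces the drop-in file. Running systemctl edit spike-sleuth creates the same override.conf interactively.

```bash
#!/usr/bin/env bash
# Sketch: override one Spike Sleuth setting through a systemd drop-in.
# Usage: write_override <unit-dir> <VARIABLE> <value>
write_override() {
    local unit_dir=$1 key=$2 value=$3
    local dropin_dir="$unit_dir/spike-sleuth.service.d"
    mkdir -p "$dropin_dir"
    # Drop-ins are read after the main unit, and a later Environment= setting
    # for the same variable overrides the earlier one.
    printf '[Service]\nEnvironment="%s=%s"\n' "$key" "$value" > "$dropin_dir/override.conf"
}

# On a real host, as root:
#   write_override /etc/systemd/system READ_THRESHOLD 2000
#   systemctl daemon-reload
#   systemctl restart spike-sleuth
#   systemctl show spike-sleuth -p Environment   # confirm the new value
```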

Polishing the Experience

To make Spike Sleuth truly production-ready, I added:

- 🌀 Log rotation: So it doesn’t fill up disks

- 🔧 Configurable environment vars: Adjust thresholds, intervals, log paths

- 🔍 Debug mode: For when you need to know why something’s not working

- 🧹 Retention policy: Delete old logs after X days

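Because sudo resets the environment by default, the variables go after sudo so they actually reach the installer:

```bash
sudo READ_THRESHOLD=1000 \
    INTERVAL=10 \
    LOG_FILE="/var/log/spike.log" \
    MIN_PROCESS_THRESHOLD=200 \
    LOG_RETENTION=7 \
    ./spike-sleuth.sh install
```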

Sample Output

```
[2024-03-20 14:30:45] 🚨 Disk I/O Spike Detected (PID: 1234, Rate: 1234.5 MB/s) 🚨
----------------------------------------
🔹 Process details (iotop):
1234 user1 123.4G/s /usr/bin/some-process

🔹 Open files for PID 1234 (lsof):
n1234 /path/to/file1
n1234 /path/to/file2

==========================================
```

The emojis? Because ops can be fun too 😎
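Because every report starts with the same "Spike Detected (PID: <pid>, ..." header line, the log is easy to summarize after the fact. A small sketch, assuming the log format shown above; summarize_spikes is a hypothetical helper that lists each PID with the number of reports logged for it, most frequent first. Since the script logs a PID at most once per spike, the count roughly tracks how many spikes each process took part in.

```bash
#!/usr/bin/env bash
# Sketch: count how many reports each PID has in a Spike Sleuth log.
# Output: "<pid> <reports>" per line, most frequent first.
summarize_spikes() {
    local log=${1:-/var/log/disk_io_spike.log}
    # Pull the PID out of every "... Spike Detected (PID: 1234, Rate: ..." line.
    sed -n 's/.*Spike Detected (PID: \([0-9]*\),.*/\1/p' "$log" |
        sort | uniq -c | sort -rn |
        awk '{ printf "%s %s\n", $2, $1 }'
}

# Usage: summarize_spikes /var/log/disk_io_spike.log
```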

The Payoff: Less Guessing, More Knowing

We eventually traced the 11.7 GiB/s spike to a batch process doing aggressive reads from a poorly indexed dataset. Spike Sleuth nailed it. Without it, we would’ve been left guessing.

Since then, it’s helped us debug high I/O issues across multiple environments—production, staging, and even CI runners.

Final Thoughts

If you’ve ever been woken up by an alert that says, “High disk read on VM-xyz,” but gives you nothing else—Spike Sleuth is for you. It’s a no-fuss, real-time Linux disk I/O monitoring tool that catches the culprits in the act, logs the details, and doesn’t get in your way.

You can grab it here:

👉 https://github.com/rick001/spike-sleuth

Feel free to fork it, tweak it, or drop a star if you found it useful.

Want to see how it works under the hood? Here’s the full Bash script that powers it all:

```bash
#!/bin/bash

# Default configuration (every value can be overridden through the environment)
READ_THRESHOLD=${READ_THRESHOLD:-5000}               # MB/s, roughly 5 GB/s
LOG_FILE=${LOG_FILE:-"/var/log/disk_io_spike.log"}
INTERVAL=${INTERVAL:-5}                              # seconds between checks
DEBUG=${DEBUG:-false}
MIN_PROCESS_THRESHOLD=${MIN_PROCESS_THRESHOLD:-500}  # MB/s per process
MAX_LOG_SIZE=${MAX_LOG_SIZE:-10485760}               # bytes (10 MB)
LOG_RETENTION=${LOG_RETENTION:-7}                    # days

# Service configuration
SERVICE_NAME="spike-sleuth"
SERVICE_FILE="/etc/systemd/system/${SERVICE_NAME}.service"
INSTALL_DIR="/usr/local/bin"

SPIKE_ACTIVE=false
declare -A LOGGED_PIDS  # PIDs already logged during the current spike
LAST_CLEANUP=$(date +%s)

# Print debug messages only when DEBUG=true
debug() {
    if [ "$DEBUG" = "true" ]; then
        echo "[DEBUG] $1"
    fi
}

# Signal handler for graceful shutdown
cleanup() {
    echo "Shutting down spike-sleuth..."
    exit 0
}

# Rotate the log file once it exceeds MAX_LOG_SIZE bytes
rotate_log() {
    if [ -f "$LOG_FILE" ] && [ "$(stat -c %s "$LOG_FILE")" -gt "$MAX_LOG_SIZE" ]; then
        mv "$LOG_FILE" "${LOG_FILE}.$(date +%Y%m%d%H%M%S)"
        touch "$LOG_FILE"
    fi
}

# Delete rotated logs older than LOG_RETENTION days. The pattern follows
# whatever LOG_FILE is set to, and find stays in the log's own directory.
cleanup_old_logs() {
    find "$(dirname "$LOG_FILE")" -maxdepth 1 -type f \
        -name "$(basename "$LOG_FILE").*" -mtime +"$LOG_RETENTION" -delete
}

# Once an hour, forget tracked PIDs whose processes have exited
cleanup_old_pids() {
    local current_time
    current_time=$(date +%s)
    if [ $((current_time - LAST_CLEANUP)) -gt 3600 ]; then
        for pid in "${!LOGGED_PIDS[@]}"; do
            if ! ps -p "$pid" > /dev/null 2>&1; then
                unset "LOGGED_PIDS[$pid]"
            fi
        done
        LAST_CLEANUP=$current_time
    fi
}

# Append iotop and lsof details for one PID to the log
log_io_info() {
    local pid=$1
    local read_rate=$2

    rotate_log
    TIMESTAMP=$(date '+%Y-%m-%d %H:%M:%S')

    # One redirection for the whole report instead of reopening the log per line
    {
        echo "[$TIMESTAMP] 🚨 Disk I/O Spike Detected (PID: $pid, Rate: $read_rate MB/s) 🚨"
        echo "----------------------------------------"
        echo "🔹 Process details (iotop):"
        # Flags are given separately: in "-boanP", -n would swallow "P" as its count.
        # iotop right-aligns the PID column, so match the first field exactly.
        iotop -b -o -a -P -d 2 -n 2 | awk -v pid="$pid" '$1 == pid'
        echo ""
        echo "🔹 Open files for PID $pid (lsof):"
        # -a ANDs the selections: only this PID, minus memory maps, program text,
        # cwd and root dir. (The old "+D /" walked the whole filesystem.)
        lsof -a -p "$pid" -d '^mem,^txt,^cwd,^rtd' -Fn 2>/dev/null | grep '^n' | head -20
        echo ""
        echo "=========================================="
        echo ""
    } >> "$LOG_FILE"
}

# Installation functions
install_service() {
    if [ "$(id -u)" -ne 0 ]; then
        echo "Error: Installation must be run as root"
        exit 1
    fi

    echo "Installing spike-sleuth service..."

    # Create the unit file; the current configuration is baked into Environment= lines
    cat > "$SERVICE_FILE" << EOF
[Unit]
Description=Spike Sleuth Disk I/O Monitoring Service
After=network.target

[Service]
Type=simple
ExecStart=$INSTALL_DIR/$SERVICE_NAME
Restart=always
RestartSec=5
Environment="READ_THRESHOLD=$READ_THRESHOLD"
Environment="LOG_FILE=$LOG_FILE"
Environment="INTERVAL=$INTERVAL"
Environment="MIN_PROCESS_THRESHOLD=$MIN_PROCESS_THRESHOLD"
Environment="MAX_LOG_SIZE=$MAX_LOG_SIZE"
Environment="LOG_RETENTION=$LOG_RETENTION"

[Install]
WantedBy=multi-user.target
EOF

    # Copy script to installation directory
    cp "$0" "$INSTALL_DIR/$SERVICE_NAME"
    chmod +x "$INSTALL_DIR/$SERVICE_NAME"

    # Reload systemd and enable the service at boot
    systemctl daemon-reload
    systemctl enable "$SERVICE_NAME"

    echo "Installation complete. To start the service, run:"
    echo "  sudo systemctl start $SERVICE_NAME"
}

uninstall_service() {
    if [ "$(id -u)" -ne 0 ]; then
        echo "Error: Uninstallation must be run as root"
        exit 1
    fi

    echo "Uninstalling spike-sleuth service..."

    # Stop and disable service
    systemctl stop "$SERVICE_NAME" 2>/dev/null
    systemctl disable "$SERVICE_NAME" 2>/dev/null

    # Remove files
    rm -f "$SERVICE_FILE"
    rm -f "$INSTALL_DIR/$SERVICE_NAME"

    # Reload systemd
    systemctl daemon-reload

    echo "Uninstallation complete."
}

# Check that required commands are available
check_dependencies() {
    for cmd in iostat iotop lsof systemctl; do
        if ! command -v "$cmd" >/dev/null 2>&1; then
            echo "Error: Required command '$cmd' is not installed."
            exit 1
        fi
    done
}

# Main monitoring function
monitor() {
    if [ "$(id -u)" -ne 0 ]; then
        echo "Error: This script must be run as root"
        exit 1
    fi

    check_dependencies

    # Create log directory if it doesn't exist
    mkdir -p "$(dirname "$LOG_FILE")"

    # Set up signal handlers
    trap cleanup SIGINT SIGTERM

    # Initial cleanup of old logs
    cleanup_old_logs

    # Log a startup banner with the effective configuration
    TIMESTAMP=$(date '+%Y-%m-%d %H:%M:%S')
    {
        echo "[$TIMESTAMP] ✅ Spike Sleuth setup complete. Monitoring started."
        echo "----------------------------------------"
        echo "Configuration:"
        echo "- Read threshold: $READ_THRESHOLD MB/s"
        echo "- Log file: $LOG_FILE"
        echo "- Check interval: $INTERVAL seconds"
        echo "- Minimum process threshold: $MIN_PROCESS_THRESHOLD MB/s"
        echo "- Max log size: $((MAX_LOG_SIZE/1024/1024))MB"
        echo "- Log retention: $LOG_RETENTION days"
        echo "=========================================="
        echo ""
    } >> "$LOG_FILE"

    while true; do
        # Highest per-device read rate (MB/s) from the second iostat report
        # (the first is the average since boot). rMB/s is located by header name
        # because its column differs between sysstat versions, and the last line
        # of iostat output is blank, so "END{print $6}" never saw a number.
        READ_MB=$(LC_ALL=C iostat -dmx 1 2 | awk '
            /^Device/ { report++; for (i = 1; i <= NF; i++) if ($i == "rMB/s") col = i; next }
            report == 2 && NF > 0 && col { if ($col + 0 > max) max = $col + 0 }
            END { printf "%.2f\n", max }')
        debug "iostat read rate: $READ_MB"

        # Check if we got a valid number
        if [[ "$READ_MB" =~ ^[0-9]+(\.[0-9]+)?$ ]]; then
            READ_INT=${READ_MB%.*}
            debug "Parsed read rate: $READ_INT MB/s"

            if [ "$READ_INT" -ge "$READ_THRESHOLD" ]; then
                SPIKE_ACTIVE=true
                debug "Spike detected: $READ_INT MB/s"

                # PIDs reading at least MIN_PROCESS_THRESHOLD MB/s in the second
                # iotop sample. With -k every rate is printed as "<value> K/s"
                # in fields 4 and 5 (classic iotop batch layout assumed).
                TOP_PIDS=$(iotop -b -o -P -k -d 1 -n 2 | awk -v threshold="$MIN_PROCESS_THRESHOLD" '
                    /^Total DISK READ/ { sample++ }
                    sample == 2 && $1 ~ /^[0-9]+$/ && $5 == "K/s" && $4 / 1024 >= threshold { print $1 }')
                debug "Top PIDs: $TOP_PIDS"

                for pid in $TOP_PIDS; do
                    if [ -z "${LOGGED_PIDS[$pid]}" ]; then
                        log_io_info "$pid" "$READ_INT"
                        LOGGED_PIDS[$pid]=1
                    fi
                done
            else
                # Spike over: reset state so the next spike logs every PID again
                if [ "$SPIKE_ACTIVE" = true ]; then
                    SPIKE_ACTIVE=false
                    LOGGED_PIDS=()  # empties the array but keeps it global and associative
                    debug "Spike ended"
                fi
            fi

            # Clean up old PIDs periodically
            cleanup_old_pids
        else
            debug "Invalid read rate value: $READ_MB"
        fi

        sleep "$INTERVAL"
    done
}

# Main script logic
case "$1" in
    install)
        install_service
        ;;
    uninstall)
        uninstall_service
        ;;
    start)
        systemctl start "$SERVICE_NAME"
        ;;
    stop)
        systemctl stop "$SERVICE_NAME"
        ;;
    status)
        systemctl status "$SERVICE_NAME"
        ;;
    *)
        monitor
        ;;
esac
```